Configuring CentOS 7 to finetune EleutherAI GPT-NEO 2.7B parameters with Torch, Transformers and Deepspeed

State of open source artificial intelligence for text generation


I like EleutherAI’s idea of providing free AI to everyone. Microsoft has bought an exclusive license for GPT-3 from OpenAI, and access is given only to developers they choose; I believe that monopolies are not good for progress.

My theory was that if you finetune the GPT-Neo 2.7B model on a specific theme (for example, SEO), you could get the same quality as GPT-3 for natural-language text generation on that theme.

We don’t take any responsibility for this guide’s accuracy or for any damage to your server; use it at your own risk.

Issues configuring the GeForce RTX 3090 to work with GPT

I tried to use this package, https://github.com/Xirider/finetune-gpt2xl, with a GeForce RTX 3090 that I rented for a day. It took me 7 hours to get the model finetuning, so I decided to write a short guide to the steps needed.

The main issue was that the GeForce RTX 3090 requires Cuda 11 to work. With the default Torch version from PIP it fails, and Cuda 11.2, the latest release, isn’t supported by the Torch packages, so we must install and compile against Cuda 11.0.

Installing GCC 7 on CentOS 7

CentOS 7 comes with GCC 4. When we run:

gcc --version

We get:

gcc (GCC) 4.8.5 20150623 (Red Hat 4.8.5-44)

The problem is that GCC 4 doesn’t support C++14. These are the steps to install GCC 7:

1. yum install centos-release-scl
2. yum install devtoolset-7
3. scl enable devtoolset-7 bash

Now when checking for the version, we get:

gcc (GCC) 7.3.1 20180303 (Red Hat 7.3.1-5)

Keep in mind that if we log out, GCC 4 becomes the default version again, so we need to run step #3 again to make GCC 7 the default compiler.
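If you rebuild these machines often, it can help to check the compiler from a script before compiling anything. Below is a minimal Python sketch (run it with python3, which is installed later in this guide); `parse_gcc_version` and `supports_cpp14` are hypothetical helper names, and it assumes the first line of `gcc --version` has the same shape as the outputs shown above. It treats GCC 5 or newer as C++14-capable, since GCC 5 was the first release with complete C++14 language support.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_gcc_version(output: str) -> Optional[Tuple[int, ...]]:
    """Return (major, minor, patch) from the first line of `gcc --version` output."""
    lines = output.splitlines()
    if not lines:
        return None
    # e.g. "gcc (GCC) 4.8.5 20150623 (Red Hat 4.8.5-44)" -> (4, 8, 5)
    match = re.search(r"\)\s+(\d+)\.(\d+)\.(\d+)", lines[0])
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def supports_cpp14(version: Tuple[int, ...]) -> bool:
    """GCC 5 was the first release with complete C++14 language support."""
    return version[0] >= 5


if __name__ == "__main__":
    if shutil.which("gcc") is None:
        print("gcc is not on PATH")
    else:
        result = subprocess.run(["gcc", "--version"],
                                stdout=subprocess.PIPE, universal_newlines=True)
        version = parse_gcc_version(result.stdout)
        if version is None:
            print("could not parse the gcc --version output")
        else:
            text = ".".join(str(part) for part in version)
            if supports_cpp14(version):
                print("gcc " + text + " supports C++14")
            else:
                print("gcc " + text + " is too old; run: scl enable devtoolset-7 bash")
```

On a fresh login this reports GCC 4.8.5 as too old, which is the reminder to run the scl command again.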

Installing Miniconda on CentOS 7

We need Miniconda later to install the Anaconda packages and compile Torch/Cuda for Python. The steps are:

1. wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
2. chmod +x Miniconda3-latest-Linux-x86_64.sh
3. ./Miniconda3-latest-Linux-x86_64.sh

The installer asks many questions; answer yes to all of them, then reconnect the shell so the path settings take effect. (Remember GCC? You’ll need to run step #3 of the GCC install again.)

Installing Nvidia Cuda 11.0 on CentOS 7

It’s essential to install this version because, as of April 2021, Torch can’t be compiled with Cuda 11.2, and I got this error when I tried it:

Installed CUDA version 11.2 does not match the version torch was compiled with 11.0, unable to compile Cuda/CPP extensions without a matching Cuda version.

The instructions are straightforward and are taken from here: https://developer.nvidia.com/cuda-11.0-download-archive?target_os=Linux&target_arch=x86_64&target_distro=CentOS&target_version=7&target_type=rpmlocal

1. wget http://developer.download.nvidia.com/compute/cuda/11.0.2/local_installers/cuda-repo-rhel7-11-0-local-11.0.2_450.51.05-1.x86_64.rpm
2. rpm -i cuda-repo-rhel7-11-0-local-11.0.2_450.51.05-1.x86_64.rpm
3. yum clean all
4. yum -y install nvidia-driver-latest-dkms cuda
5. yum -y install cuda-drivers

Installing Nvidia drivers on CentOS 7

This guide assumes that there are no drivers installed.

You can check that there are no drivers installed by calling:

lshw -class display

This is the output from another server we rented (a GeForce GTX 1080 Ti this time):

description: VGA compatible controller
product: GP102 [GeForce GTX 1080 Ti]
vendor: NVIDIA Corporation
physical id: 0
bus info: pci@0000:82:00.0
version: a1
width: 64 bits
clock: 33MHz
capabilities: pm msi pciexpress vga_controller cap_list
configuration: latency=0
resources: iomemory:387f0-387ef iomemory:387f0-387ef memory:f8000000-f8ffffff memory:387fc0000000-387fcfffffff memory:387fd0000000-387fd1ffffff ioport:e000(size=128) memory:f9000000-f907ffff

As you can see, no driver is installed.
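The check above can be scripted as well. This sketch (the helper name `display_driver` is hypothetical) runs `lshw -class display` when lshw is available and reads the `driver=` entry from the configuration field: no entry means no driver is bound, and `nouveau` means the default driver described next has to be disabled first. It only looks at the first `driver=` it finds, so on a machine with several display devices you would want to split the output per device.

```python
import re
import shutil
import subprocess
from typing import Optional


def display_driver(lshw_output: str) -> Optional[str]:
    """Return the kernel driver bound to the display device, or None if there is none."""
    # lshw reports the bound driver as "driver=<name>" inside the configuration field
    match = re.search(r"\bdriver=(\S+)", lshw_output)
    return match.group(1) if match else None


if __name__ == "__main__":
    if shutil.which("lshw") is None:
        print("lshw is not installed")
    else:
        result = subprocess.run(["lshw", "-class", "display"],
                                stdout=subprocess.PIPE, stderr=subprocess.DEVNULL,
                                universal_newlines=True)
        driver = display_driver(result.stdout)
        if driver is None:
            print("no driver is bound to the display device")
        elif driver == "nouveau":
            print("nouveau is active; disable it before installing the NVIDIA driver")
        else:
            print("driver in use: " + driver)
```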

Nouveau driver installed on CentOS 7

In some cases a default driver is already installed, and it will look like this:

capabilities: pm pciexpress msix bus_master cap_list

configuration: driver=nouveau latency=0

resources: irq:10 memory:fd000000-fdffffff memory:e0000000-efffffff memory:f0000000-f1ffffff

To disable it:

1. Run: nano /etc/default/grub
2. Add rd.driver.blacklist=nouveau nouveau.modeset=0 to the GRUB_CMDLINE_LINUX line, so the final line looks like this: GRUB_CMDLINE_LINUX="rd.driver.blacklist=nouveau nouveau.modeset=0 console=tty0"
3. Regenerate grub: grub2-mkconfig -o /boot/grub2/grub.cfg
4. Add the entry blacklist nouveau to the file /etc/modprobe.d/blacklist.conf
5. Reboot: reboot now
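Editing GRUB_CMDLINE_LINUX by hand is easy to get wrong, so here is a hedged sketch of that one edit in Python. The helper names are hypothetical; it only handles a double-quoted GRUB_CMDLINE_LINUX line, prepends the two blacklist arguments if they are missing (so running it twice changes nothing), and prints the patched /etc/default/grub instead of writing it. You would still regenerate grub.cfg, add the blacklist.conf entry and reboot as described above.

```python
import os
import re

# Kernel arguments this guide adds to GRUB_CMDLINE_LINUX
BLACKLIST_ARGS = ("rd.driver.blacklist=nouveau", "nouveau.modeset=0")


def add_kernel_args(line: str, args=BLACKLIST_ARGS) -> str:
    """Prepend missing kernel arguments to a GRUB_CMDLINE_LINUX="..." line."""
    match = re.fullmatch(r'GRUB_CMDLINE_LINUX="(.*)"', line.strip())
    if match is None:
        return line  # another setting, or a quoting style this sketch does not handle
    existing = match.group(1).split()
    missing = [arg for arg in args if arg not in existing]
    return 'GRUB_CMDLINE_LINUX="{}"'.format(" ".join(missing + existing))


def patch_grub_defaults(text: str) -> str:
    """Apply add_kernel_args to every line of /etc/default/grub content."""
    return "\n".join(add_kernel_args(line) for line in text.splitlines()) + "\n"


if __name__ == "__main__":
    path = "/etc/default/grub"
    if os.access(path, os.R_OK):
        with open(path) as handle:
            # Dry run: print the result instead of overwriting the file
            print(patch_grub_defaults(handle.read()), end="")
    else:
        print("cannot read " + path)
```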

Disabling the X server on CentOS 7

If you have an X server running, you will need to stop it before installing the driver by calling:

systemctl isolate multi-user.target

Installing the NVIDIA driver

You will need to install some prerequisites:

yum install epel-release

yum install kernel-devel dkms

To install the driver, download the installer from Nvidia and run it. It asks many questions; answer yes to all of them.

In our case, the command was:

bash NVIDIA-Linux-x86_64-460.67.run

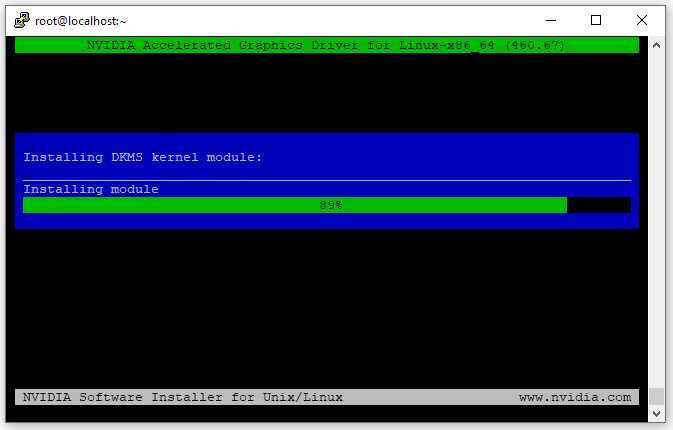

It looks like this:

Checking the driver again, this time we see it’s installed:

description: VGA compatible controller
product: GP102 [GeForce GTX 1080 Ti]
vendor: NVIDIA Corporation
physical id: 0
bus info: pci@0000:82:00.0
version: a1
width: 64 bits
clock: 33MHz
capabilities: pm msi pciexpress vga_controller bus_master cap_list rom
configuration: driver=nvidia latency=0
resources: iomemory:387f0-387ef iomemory:387f0-387ef irq:34 memory:f8000000-f8ffffff memory:387fc0000000-387fcfffffff memory:387fd0000000-387fd1ffffff ioport:e000(size=128) memory:f9000000-f907ffff
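To confirm from a script that the installed driver is recent enough for Cuda 11.0, you can query it with `nvidia-smi --query-gpu=driver_version --format=csv,noheader`. In this sketch, using 450.51.05 (the driver version that appears in the Cuda 11.0.2 rpm file name above) as the reference is an assumption; NVIDIA's Cuda release notes have the authoritative minimum-driver table. `version_tuple` and `driver_is_new_enough` are hypothetical names.

```python
import shutil
import subprocess
from typing import Tuple

# Assumed reference: the driver version bundled with the Cuda 11.0.2 local installer,
# taken from the rpm file name cuda-repo-rhel7-11-0-local-11.0.2_450.51.05-1.x86_64.rpm
REFERENCE_DRIVER = "450.51.05"


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn "450.51.05" into (450, 51, 5) so versions compare numerically."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_is_new_enough(installed: str, reference: str = REFERENCE_DRIVER) -> bool:
    return version_tuple(installed) >= version_tuple(reference)


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; the NVIDIA driver does not seem to be installed")
    else:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
            stdout=subprocess.PIPE, universal_newlines=True,
        )
        lines = result.stdout.splitlines()
        if result.returncode != 0 or not lines:
            print("nvidia-smi could not report a driver version")
        else:
            installed = lines[0]  # one line per GPU, all using the same driver
            verdict = "ok" if driver_is_new_enough(installed) else "older than " + REFERENCE_DRIVER
            print("driver " + installed + ": " + verdict)
```

Comparing tuples of integers instead of strings matters here: as strings, "460.67" and "450.51.05" happen to order correctly, but a component like "9" vs "10" would not.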

Installing Tesla drivers

For cards that are not GeForce, download the GeForce drivers and install them anyway. This is what I got from a Tesla card on Amazon AWS:

description: 3D controller
product: TU104GL [Tesla T4]
vendor: NVIDIA Corporation
physical id: 1e
bus info: pci@0000:00:1e.0
version: a1
width: 64 bits
clock: 33MHz
capabilities: pm pciexpress msix bus_master cap_list
configuration: driver=nvidia latency=0
resources: irq:10 memory:fd000000-fdffffff memory:e0000000-efffffff memory:f0000000-f1ffffff

Kernel headers error

If you get an error similar to: Your kernel headers for kernel 3.10.0-1062.12.1.el7.x86_64 cannot be found at

Make sure to update the system with yum update, and then reboot. The reason is that the installed kernel headers don’t match the running kernel.
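You can check for this mismatch before running the installer. This sketch assumes the headers for a kernel are reachable at /lib/modules/<release>/build (on CentOS 7 that is normally a symlink into /usr/src/kernels, filled in by the matching kernel-devel package) and checks that directory for the release reported by `platform.release()`, the same value as `uname -r`. The function names are hypothetical.

```python
import os
import platform


def headers_dir(release: str, modules_root: str = "/lib/modules") -> str:
    """Assumed location of the headers for kernel `release`."""
    return os.path.join(modules_root, release, "build")


def headers_installed(release: str, modules_root: str = "/lib/modules") -> bool:
    # isdir follows symlinks, so a dangling "build" link counts as missing headers
    return os.path.isdir(headers_dir(release, modules_root))


if __name__ == "__main__":
    running = platform.release()  # same value as `uname -r`
    if headers_installed(running):
        print("headers found for the running kernel " + running)
    else:
        print("no headers for the running kernel " + running + "; run yum update and reboot")
```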

Installing Python 3.6 on CentOS 7

Run:

yum install python3 python3-devel

Upgrading PIP3

Run:

pip3 install --upgrade pip

Installing and compiling Anaconda, Torch and Cuda on CentOS 7

Run:

1. conda install -c anaconda cudatoolkit
2. conda install pytorch torchvision torchaudio cudatoolkit=11.0 -c pytorch -c conda-forge
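Before moving on, it is worth confirming that the Torch build from conda actually sees the GPU. In this minimal sketch, `gpu_summary` is a hypothetical helper that takes the torch module as a parameter (so it can be exercised without a GPU) and reports `torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()` and, when a GPU is visible, `torch.cuda.get_device_name(0)` and `torch.cuda.get_device_capability(0)`. A `built_with_cuda` value of None means a CPU-only build was installed.

```python
def gpu_summary(torch_module) -> dict:
    """Collect the values worth checking right after installing Torch."""
    summary = {
        "torch_version": torch_module.__version__,
        # Cuda version Torch was built with; None means a CPU-only build
        "built_with_cuda": torch_module.version.cuda,
        "cuda_available": torch_module.cuda.is_available(),
    }
    if summary["cuda_available"]:
        summary["device_name"] = torch_module.cuda.get_device_name(0)
        summary["capability"] = torch_module.cuda.get_device_capability(0)
    return summary


if __name__ == "__main__":
    try:
        import torch
    except (ImportError, OSError):
        print("torch cannot be imported by this interpreter")
    else:
        for key, value in gpu_summary(torch).items():
            print("{}: {}".format(key, value))
```

Run it with the same interpreter that training will use; with Miniconda on the PATH, that may not be the system python3.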

Download the GPT-NEO training package

Run:

1. git clone https://github.com/Xirider/finetune-gpt2xl.git
2. chmod -R 777 finetune-gpt2xl/
3. cd finetune-gpt2xl
4. pip3 install -r requirements.txt

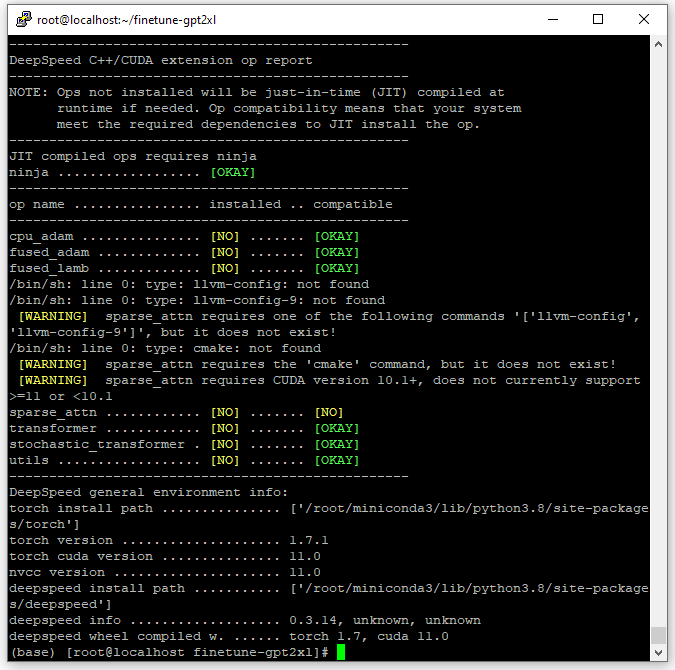

Checking Deepspeed is configured correctly to train GPT

Deepspeed is compiled after we install it, so we need to make sure the installed Cuda version matches the one Torch was compiled with. If it doesn’t, we will need to reinstall Deepspeed.
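The comparison ds_report makes can also be reproduced in a few lines, which helps when deciding whether Deepspeed needs to be reinstalled. This sketch parses the `release X.Y` part of `nvcc --version` and compares its major and minor numbers with `torch.version.cuda`, the two numbers shown in the mismatch error quoted earlier. The helper names are hypothetical, and falling back to /usr/local/cuda/bin when nvcc is not on PATH is an assumption about where the rpm install places the toolkit.

```python
import os
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(nvcc_output: str) -> Optional[str]:
    """Extract "11.0" from a line like "Cuda compilation tools, release 11.0, V11.0.194"."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def same_major_minor(installed: str, torch_cuda: str) -> bool:
    """Compare only major.minor, the two numbers shown in the mismatch error."""
    return installed.split(".")[:2] == torch_cuda.split(".")[:2]


def find_nvcc() -> Optional[str]:
    """Use nvcc from PATH, else fall back to an assumed default install location."""
    on_path = shutil.which("nvcc")
    if on_path:
        return on_path
    fallback = "/usr/local/cuda/bin/nvcc"
    return fallback if os.path.exists(fallback) else None


if __name__ == "__main__":
    nvcc = find_nvcc()
    try:
        import torch
        torch_cuda = torch.version.cuda  # None for CPU-only builds
    except (ImportError, OSError):
        torch_cuda = None
    if nvcc is None or torch_cuda is None:
        print("need both nvcc and a Cuda-enabled torch build to compare")
    else:
        output = subprocess.run([nvcc, "--version"], stdout=subprocess.PIPE,
                                universal_newlines=True).stdout
        installed = parse_nvcc_release(output)
        if installed is None:
            print("could not parse the nvcc --version output")
        elif same_major_minor(installed, torch_cuda):
            print("Cuda " + installed + " matches torch (" + torch_cuda + ")")
        else:
            print("mismatch: nvcc " + installed + " vs torch " + torch_cuda)
```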

Run:

ds_report

If this doesn’t work, you can try:

python3 -m deepspeed.env_report

This is the report we got:

Alternative way

I keep coming back to this guide to set up our AI training machines, and every few months something breaks. In our latest install, in December 2021, we had to run this after installing everything (it’s possible you can skip the entire Conda part):

pip3 install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html
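Since this route swaps the conda packages for pip wheels, a quick way to confirm that pip really picked the cu110 builds is to compare the imported versions with the pins in the command. This sketch assumes each package’s `__version__` matches its pip pin, including the `+cu110` suffix for torch and torchvision; `installed_versions`, `pin_mismatches` and `EXPECTED` are hypothetical names. Any import failure is reported as a missing package.

```python
import importlib
from typing import Dict, Iterable, Optional

# Versions requested by the pip3 command above (assumed to match __version__)
EXPECTED = {
    "torch": "1.7.1+cu110",
    "torchvision": "0.8.2+cu110",
    "torchaudio": "0.7.2",
}


def installed_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Import each package and record its __version__, or None if it cannot be imported."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except Exception:  # a broken install can raise more than ImportError
            versions[name] = None
        else:
            versions[name] = getattr(module, "__version__", None)
    return versions


def pin_mismatches(installed: Dict[str, Optional[str]],
                   expected: Dict[str, str] = EXPECTED) -> Dict[str, Optional[str]]:
    """Map every package whose installed version differs from its pin to what was found."""
    return {name: installed.get(name) for name in expected
            if installed.get(name) != expected[name]}


if __name__ == "__main__":
    problems = pin_mismatches(installed_versions(EXPECTED))
    if not problems:
        print("torch, torchvision and torchaudio match the pins")
    for name, found in problems.items():
        print("{}: expected {}, found {}".format(name, EXPECTED[name], found))
```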

Doing the actual GPT-NEO model finetuning

Now follow the steps in the guide we used to do the finetuning. In our case, the server had just 64GB of memory, and we had to reduce the batch size. If there isn’t enough memory, the error looks like this:

allocate memory: you tried to allocate 10605230080 bytes
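The number in the error is easier to read in GiB: 10605230080 bytes is about 9.88 GiB. The message appears to come from PyTorch’s CPU allocator, which means it is system RAM that ran out, consistent with the 64GB server. This sketch (hypothetical helper names) converts the figure and reads MemAvailable from /proc/meminfo, which the kernel reports in kB (units of 1024 bytes), so you can compare the two before restarting the training with a smaller batch.

```python
import os
import re
from typing import Optional

GIB = 1024 ** 3
FAILED_ALLOCATION = 10605230080  # bytes, from the error message above


def bytes_to_gib(num_bytes: int) -> float:
    return num_bytes / GIB


def mem_available_bytes(meminfo_text: str) -> Optional[int]:
    """Parse MemAvailable from /proc/meminfo content; the kernel reports it in kB (1024 bytes)."""
    match = re.search(r"^MemAvailable:\s+(\d+) kB", meminfo_text, re.MULTILINE)
    return int(match.group(1)) * 1024 if match else None


if __name__ == "__main__":
    print("failed allocation: {:.2f} GiB".format(bytes_to_gib(FAILED_ALLOCATION)))
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as handle:
            available = mem_available_bytes(handle.read())
        if available is not None:
            print("available now: {:.2f} GiB".format(bytes_to_gib(available)))
```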

CPUAdam

With the GeForce RTX 3090, we got some weird errors with Adam:

AttributeError: 'DeepSpeedCPUAdam' object has no attribute 'ds_opt_adam'

The solution was to follow this tip: https://github.com/Xirider/finetune-gpt2xl/issues/3#issue-850573396

Issues with Pandas

An update to the Pandas package broke the training process; the solution was to downgrade it:

pip3 install Pandas==1.2.5

Open source issues

I have revised this article a number of times because the packages and their install syntax changed. Something may break again in the future; if it does, write in the comments.

Summary

I hope this guide saved you time getting the finetuning running.

EleutherAI said they should have a large model between August and the end of the year, and I look forward to it.